The primary benefits of this configuration are cost reduction and high performance. How is it possible to reduce cost and improve performance with Kubernetes? The short answer is that autoscaling delivers the cost reduction, while affinity rules boost performance.

Spark is superior to Hadoop MapReduce, primarily because of its memory re-use and caching. It is blazing fast, but only as long as you provide enough RAM for the workers. The moment Spark spills data to disk during calculations and keeps it there instead of in memory, getting the query result becomes slower. So it stands to reason that you want to keep as much memory as possible available for your Spark workers.

But it's very expensive to keep a static datacenter, of either bare metal or virtual machines, with enough memory capacity to run the heaviest queries. Since the queries that demand peak resource capacity are infrequent, it would be a waste of resources and money to try to accommodate the maximum required capacity in a static cluster. The better solution is to create a dynamic Kubernetes cluster that uses the needed resources on demand and releases them when the heavy lifting is no longer required. This covers many common situations, such as a summary query that runs just once a day but consumes 15 terabytes of memory across the cluster to complete the calculations on time and present the summary report to a customer's portal.
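To make the cost argument concrete, here is a rough back-of-the-envelope sketch in Python. Only the 15 TB peak and the once-a-day cadence come from the example in the text; the baseline memory, the duration of the peak, and the price per GB-hour are hypothetical placeholders, not real quotes, and the model ignores scale-up time, storage, and CPU costs.

```python
# Back-of-the-envelope comparison of a static and an autoscaled cluster.
# Only the 15 TB peak and the once-a-day cadence come from the article;
# every other number below is an assumed placeholder.

PEAK_MEMORY_GB = 15 * 1024       # 15 TB needed by the daily summary query
BASELINE_MEMORY_GB = 1 * 1024    # assumed: memory needed outside the peak
PEAK_HOURS_PER_DAY = 2           # assumed: how long the heavy query runs
PRICE_PER_GB_HOUR = 0.005        # assumed: placeholder price, not a real quote


def static_daily_cost(peak_gb, price_per_gb_hour):
    """Static cluster: peak capacity stays provisioned around the clock."""
    return peak_gb * price_per_gb_hour * 24


def dynamic_daily_cost(peak_gb, baseline_gb, peak_hours, price_per_gb_hour):
    """Autoscaled cluster: peak capacity only while the heavy query runs."""
    peak_cost = peak_gb * price_per_gb_hour * peak_hours
    baseline_cost = baseline_gb * price_per_gb_hour * (24 - peak_hours)
    return peak_cost + baseline_cost


static_cost = static_daily_cost(PEAK_MEMORY_GB, PRICE_PER_GB_HOUR)
dynamic_cost = dynamic_daily_cost(
    PEAK_MEMORY_GB, BASELINE_MEMORY_GB, PEAK_HOURS_PER_DAY, PRICE_PER_GB_HOUR
)

print(f"static cluster:     {static_cost:.2f} per day")
print(f"autoscaled cluster: {dynamic_cost:.2f} per day")
```

The shorter and rarer the peak, the larger the gap between the two figures, which is the case autoscaling is meant to exploit.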

Kublr and Kubernetes make it easy to deploy, scale, and manage Spark and its companion tools in production, in a stable manner, with failover mechanisms and auto-recovery in case of failures. We've created a tutorial to demonstrate the setup and configuration of a Kubernetes cluster for dynamic Spark workloads.

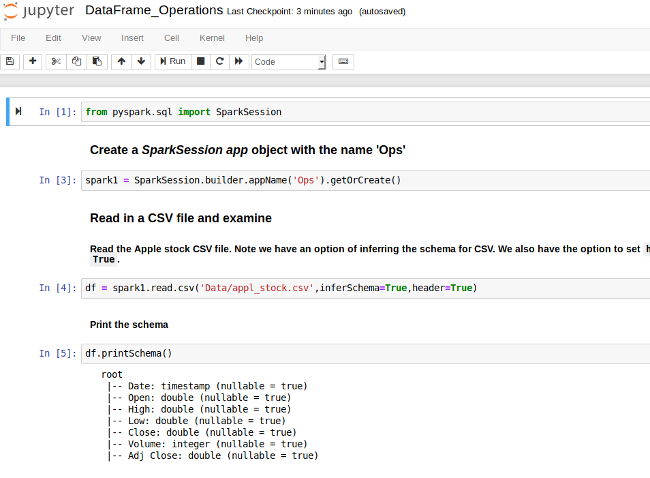

Kublr and Kubernetes can help make your favorite data science tools easier to deploy and manage. Hadoop Distributed File System (HDFS) carries the burden of storing big data, Spark provides many powerful tools to process it, and Jupyter Notebook is the de facto standard UI for dynamically managing queries and visualizing results.
